Posts filed under "History"

August 5, 2021

August 28, 2019

The Unum Pattern

Warning: absurd technical geekery ahead — even compared to the kinds of things I normally talk about. Seriously. But things will be back to my normal level of still-pretty-geeky-but-basically-approachable soon enough.

[Historical note: This post has been a long time in the making — the roots of the fundamental idea being described here go back to the original Habitat system (although we didn’t realize it at the time). It describes a software design pattern for distributed objects — which we call the “unum” — that I and some of my co-conspirators at various companies have used to good effect in many different systems, but which is still obscure even among the people who do the kinds of things I do. In particular, I’ve described this stuff in conversation with lots of people over the years and a few of them have published descriptions of what they understood, but their writeups haven’t, to my sensibilities at least, quite captured the idea as I conceive of it. But I’ve only got myself to blame for this as I’ve been lax in actually writing down my own understanding of the idea, for all the usual reasons one has for not getting around to doing things one should be doing.]

Consider a distributed, multi-participant virtual world such as Habitat or one of its myriad descendants. This world is by its nature very object oriented, but not in quite the same way that we mean when we talk about, for example, object oriented programming. This is confusing because the implementation is, itself, very object oriented, in exactly the object oriented programming sense.

Imagine being in this virtual world somewhere, say, in a room in a building in downtown Populopolis. And there is a table in the room and sitting on the table is a teacup. Well, I said you were in the virtual world, but you’re not really in it, your avatar is in it, and you are just interacting with it through the mediation of some kind of client software running on your local computer (or perhaps these days on your phone), which is in turn communicating over a network connection to a server somewhere. So the question arises, where is the teacup, really? Certainly there is a representation of the teacup inside your local computer, but there is also a representation of the teacup inside the server. And if I am in the room with you (well, my avatar, but that’s not important right now), then there’s also a representation of the teacup inside my local computer. So is the teacup in your computer or in my computer or in the server? One reasonable answer is “all of the above”, but in my experience a lot of technical people will say that it’s “really” in the server, since they regard the server as the source of truth. But the correct answer is that the teacup is on a table in a room inside a building in Populopolis. The teacup occupies a different plane of existence from the software objects that are used to realize it. It has an objective identity of its own — if you and I each refer to it, we are talking about the same teacup — but this identity is entirely distinct from the identities of any of those software objects. And it has such an identity, because even though it’s on a different plane there still needs to be some kind of actual identifier that can be used in the communications protocols that the clients and the server use to talk to each other, so that they can refer to the teacup when they describe their manipulations of it and the things that happen to it.

You might distinguish between these two senses of “object” by using phrases with modifiers; for example, you might say “world object” versus “OOP object”, and in fact that is what we did for several years. However, this terminology made it easy to fall back on the shorthand of just talking about “objects” when it was clear from context which of these two meanings of “object” you meant. Of course, it often turned out that this context wasn’t actually clear to somebody in the conversation, with confusion and misunderstanding as the common result. So after a few false starts at crafting alternative jargon we settled on using the term “object” to always refer to an OOP object in an implementation and the term “unum”, from the Latin meaning a single thing, to refer to a world object. This term has worked well for us, aside from endless debates about whether the plural is properly “una” or “unums” (my opinion is: take your pick; people will know what you mean either way).

Of course, we still have to explain the relationship between the unum and its implementation. The objects (using that word from now on according to our revised terminology) that realize the unum do live at particular memory addresses in particular computers. We think of the unum, in contrast, as having a distributed existence. We speak of the portion of the unum that resides in a particular machine as a “presence”. So to go back to the example I started with, the teacup unum has a presence on the server and presences on each of our client machines.

(As an aside, for the first few years of trying to explain to people how Habitat worked, I would sometimes find myself in confused discussions about “distributed objects”, by which the people with whom I was talking meant individual objects that were located at different places on the network, whereas I meant objects that were themselves distributed entities. I didn’t at first realize these conversations were at cross purposes because the model we had developed for Habitat seemed natural and obvious to me at the time — how else could it possibly work, after all? — and it took me a while to twig to the fact that other people conceived of things in a very different way. Another reason for introducing a new word.)

In the teacup example, we have a server presence and some number of client presences. The client presences are concerned with presenting the unum to their local users while the server presence is concerned with keeping track of that portion of the unum’s state which all the users share. Phrased this way, many people find the presence abstraction very natural, but it sometimes leads them to jump to conclusions about what is going on, resulting in still more confusion and conversation at cross purposes. People who implement distributed systems often build on top of frameworks that provide services like data replication, and so it is easy to fall into thinking of the server presence as the “real” version of the unum and the client presences as shadow copies that maintain a (perhaps slightly out of date) cached representation of the true state. Or thinking of the client presences as proxies of some kind. This is not exactly wrong, in the sense that you can certainly build systems that work this way, as many distributed applications — possibly including most commercially successful MMOs — actually do. However, it’s not the model I’m describing here.

One problem with data replication based schemes is that they don’t gracefully accommodate use cases that require some information be withheld from some participants (it’s not that you absolutely can’t do this, but it’s awkward and cuts against the grain). It’s not just that the server is authoritative about shared state, but also that the server is allowed to take into account private state that the clients don’t have, in order to determine how the shared state changes over time and in response to events.

A server presence and a client presence are not doing the same job. The fundamental underlying concept that presences embody is not some notion of master vs. replica, but division of labor. Each has distinct responsibilities in the joint work of being the unum. Each is authoritative about different aspects of the unum’s existence (and typically each will maintain private state of their own that they do not share with the other). In the case of the client-server model in our example, the client presence manages client-side concerns such as the state of the display. It worries about things like 3D rendering, animation sequencing, and presenting controls to the human user to manipulate the teacup with. The server keeps track of things like the physical model of the teacup within the virtual world. It worries about the interactions between the teacup and the table, for example. Each presence knows things that are none of the other presence’s business, either because that information is simply outside the scope of what the other presence does (such as the current animation frame or the force being applied to the table) or because it’s something the other presence is not supposed to know (such as the server knowing that this particular teacup has a hidden flaw that will cause it to break into several pieces if you pour hot water into it, revealing a secret message inscribed on the edges where it comes apart). The various different client presences may also have information they do not share with each other for reasons of function or privacy. For example, one client might do 3D rendering in a GUI window while another presents only a textual description with a command line interface. Perhaps the server has revealed the secret message hidden in the teacup to my client (and to none of the others) because I possess a magic amulet that lets me see such things.
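To make the division of labor concrete, here is a minimal sketch in Python of the teacup's two kinds of presence. The class names, field names, and the `describe_for` method are all made up for illustration (none of them come from Habitat or Elko); the point is only that each side holds state the other never sees, and that the server decides per client what to reveal.

```python
from dataclasses import dataclass, field


@dataclass
class TeacupServerPresence:
    """Server side: the world model, plus secrets the clients never see."""
    unum_id: str
    contents: str = "empty"                         # shared with every client
    has_hidden_flaw: bool = True                    # private to the server
    secret_message: str = "MEET AT THE WEST WALL"   # private to the server

    def describe_for(self, client_has_amulet: bool) -> dict:
        """Build the view that one particular client is entitled to."""
        view = {"unum": self.unum_id, "contents": self.contents}
        if client_has_amulet:
            view["secret_message"] = self.secret_message
        return view


@dataclass
class TeacupClientPresence:
    """Client side: presentation concerns that are none of the server's business."""
    unum_id: str
    renderer: str                                   # "3d" or "text"
    animation_frame: int = 0                        # private to this client
    known: dict = field(default_factory=dict)       # what the server has told us

    def update(self, view: dict) -> None:
        self.known = view

    def present(self) -> str:
        if self.renderer == "text":
            return f"A teacup ({self.known.get('contents', '?')})."
        return f"<mesh teacup frame={self.animation_frame}>"
```

Two clients attached to the same server presence can end up knowing different things and showing them in different ways, yet they are still presences of the same teacup.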

We can loosely talk about “sending a message to an unum”, but the sending of messages is an OOP concept rather than a world model concept. Sending a message to an unum (which is not an object) is really sending a message to some presence of that unum (since a presence is an object). This means that to designate the target of such a message, the address needs two components: (1) the identity of the unum and (2) an indicator of which presences of that unum you want to talk to.

In the systems I’ve implemented (including Habitat, but also, perhaps more usefully for anyone who wants to play with these ideas, its Nth generation descendant, the Elko server framework), the objects on a machine within a given application all run inside a common execution environment — what we now call a “vat”. Cross-machine messages are transported over communications channels established between vats. In such a system, from a vat’s perspective the external presences of a given unum (that is, presences other than the local one) are thus in one-to-one correspondence with the message channels to the other vats that host those presences, so you can designate a presence by indicating the channel that leads to its vat. (For those presences you can talk to, anyway: the unum model does not require that a presence be able to directly communicate with all the other presences. For example, in the case of a Habitat or Elko-style system such as I am describing here, clients don’t talk to other clients, but only to the server.)
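The two-part address (unum identity plus the channel leading to the target presence's vat) can be sketched roughly as follows. This is a toy model under my own assumptions: `Vat`, `Channel`, and their methods are hypothetical names, not the Elko API, and the "network" is just a list that messages get appended to.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Message = dict


@dataclass
class Channel:
    """Hypothetical stand-in for a connection to one remote vat."""
    remote_vat: str
    outbox: List[Tuple[str, Message]] = field(default_factory=list)

    def send(self, unum_id: str, message: Message) -> None:
        # A real channel would serialize and transmit; here we just record.
        self.outbox.append((unum_id, message))


class Vat:
    """Holds the local presences (keyed by unum id) and channels to other vats."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.channels: Dict[str, Channel] = {}
        self.local_presences: Dict[str, Callable[[str, Message], None]] = {}

    def connect(self, remote_vat: str) -> Channel:
        channel = Channel(remote_vat)
        self.channels[remote_vat] = channel
        return channel

    def send(self, unum_id: str, remote_vat: str, message: Message) -> None:
        """Address = (which unum, which channel leads to the presence we want)."""
        self.channels[remote_vat].send(unum_id, message)

    def deliver(self, from_vat: str, unum_id: str, message: Message) -> None:
        """Incoming traffic goes to the local presence of the named unum."""
        self.local_presences[unum_id](from_vat, message)
```

A client vat in this sketch would have exactly one entry in `channels`, which is why client code can get away with naming only the unum.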

Here we encounter an asymmetry between client and server that is another frequent source of confusion. From the client’s perspective, there is only one open message channel — the one that talks to the server — and so the only other unum presence a client knows about is the server presence. In this situation, the identifier of the unum is sufficient to determine where a message should be sent, since there is only one possibility. Developers working on client-side code don’t have to distinguish between “send a message to the unum” and “send a message to the server presence of the unum”. Consequently, they can program to the conventional model of “send messages to objects on the other end of the connection” and everything works more or less the way they are used to. On the server side, however, things get more interesting. Here we encounter something that people accustomed to developing in the web world have usually never experienced: server code that is simultaneously in communication with multiple clients. This is where working with the unum pattern suddenly becomes very different, and also where it acquires much of its power and usefulness.

In the client-server unum model, the server can communicate with all of an unum’s client presences. Although a given message could be sent to any of them, or to all of them, or to any arbitrary subset of them, in practice we’ve found that a small number of messaging patterns suffice to capture everything we’ve wanted to do. More specifically, there are four patterns that in our experience are repeatedly useful, to the point where we’ve codified these in the messaging libraries we use to implement distributed applications. We call these four messaging patterns Reply, Neighbor, Broadcast, and Point, all framed in the context of processing some message that has been received by the server presence from one of the clients; among other things, this context identifies which client it was who sent it. A Reply message is directed back to the client presence that sent the message the server is processing. A Point message is directed to a specific client presence chosen by the server; this is similar to a Reply message except that the recipient is explicit rather than implied and could be any client regardless of context. A Broadcast message is sent to all the client presences, while a Neighbor message is directed to all the client presences except the sender of the message that the server is processing. The latter pattern is the one that people coming to the unum model for the first time tend to find weird; I’ll explain its use in a moment.
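Here is a small Python sketch of the four patterns as seen from a server presence. The names (`ServerPresence`, `_fanout`, `attach`) are invented for this example and the per-client "outboxes" stand in for real message channels; the actual libraries are organized differently.

```python
from typing import Dict, Iterable, List, Tuple


class ServerPresence:
    """Server presence of one unum, tracking which clients host a presence."""

    def __init__(self, unum_id: str) -> None:
        self.unum_id = unum_id
        self.outboxes: Dict[str, List[Tuple[str, dict]]] = {}

    def attach(self, client: str) -> None:
        self.outboxes[client] = []

    def _fanout(self, recipients: Iterable[str], message: dict) -> None:
        """One low-level primitive: a single message, any number of targets."""
        for client in recipients:
            self.outboxes[client].append((self.unum_id, message))

    # Each pattern is just a different choice of recipient set.
    def reply(self, sender: str, message: dict) -> None:
        self._fanout([sender], message)

    def point(self, target: str, message: dict) -> None:
        self._fanout([target], message)

    def neighbor(self, sender: str, message: dict) -> None:
        self._fanout([c for c in self.outboxes if c != sender], message)

    def broadcast(self, message: dict) -> None:
        self._fanout(list(self.outboxes), message)
```

Note that all four are thin wrappers over the same fanout routine rather than being built out of Point; the same message object is handed to every recipient instead of being copied once per target.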

(Some people jump to the idea that these four are all generalizations of the Point message, thinking it a good primitive to actually implement the other three, but in the systems we’ve built the messaging primitive is a lower level construct that handles fanout and routing for one or many recipients with a single, common mechanism so that we don’t have to multiply buffer the message if it has more than one target. In practice, we use Point messages rather rarely; in fact, using a Point message usually indicates that you’re doing something odd.)

The reason for there being multiple client presences in the first place is that the presences all share a common context in which the actions of one client can affect the others. This is in contrast to the classic web model in which each client is engaged in its own one-on-one dialog with the server, pretty much unrelated to any simultaneous dialogs the server might be having with other clients that just happen to be connected to it at the same time. However, the multiple-clients-in-a-shared-context model is a very good match for the kinds of online game and virtual world applications for which it was originated (it’s not that you can’t realize those kinds of applications using the web model, but, like the comment I made above about data replication, it’s cutting against the grain — it’s not a natural thing for web servers to do).

Actions initiated by a client typically take the form of a request message from that client to an unum’s server presence. The server’s handler for this message takes whatever actions are appropriate, then sends a Reply message back informing the requestor of the results of the action, along with a Neighbor message to the other client presences informing them of what just happened. The Reply and Neighbor messages generally have different payloads since the requestor typically already knows what’s going on and often merely needs a status result, whereas the other clients need to be informed of the action de novo. It is also common for the requestor to be a client that is in some privileged role with respect to the unum (perhaps the sending client is associated with the unum’s owner or holder, for example), and thus entitled to be given additional information in the Reply that is not shared with the other clients.
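As a rough illustration of that request/Reply/Neighbor shape, here is a toy handler for a hypothetical "sip" request on the teacup. Everything here is an assumption made for the example: the `TeacupServer` class, the `SIP` operation, the `sips_left` field, and the use of plain dicts as messages.

```python
from typing import Dict, List


class TeacupServer:
    """Toy server presence; each client's outbox stands in for its channel."""

    def __init__(self, holder: str, sips_left: int) -> None:
        self.holder = holder          # the client in a privileged role
        self.sips_left = sips_left    # detail revealed only to the holder
        self.outboxes: Dict[str, List[dict]] = {}

    def attach(self, client: str) -> None:
        self.outboxes[client] = []

    def handle_sip(self, sender: str) -> None:
        # 1. Take whatever action is appropriate.
        ok = self.sips_left > 0
        if ok:
            self.sips_left -= 1

        # 2. Reply: the requestor mostly just needs a status result,
        #    plus any extra detail its role entitles it to.
        reply = {"op": "SIP", "ok": ok}
        if sender == self.holder:
            reply["sips_left"] = self.sips_left
        self.outboxes[sender].append(reply)

        # 3. Neighbor: everyone else hears about the action from scratch.
        if ok:
            news = {"op": "SIP", "by": sender}
            for client, outbox in self.outboxes.items():
                if client != sender:
                    outbox.append(news)
```

The Reply and Neighbor payloads differ on purpose: the requestor gets a result, the neighbors get a description of an event, and only the holder learns how much tea is left.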

Actions initiated by the server, on the other hand, typically will be communicated to all the clients using the Broadcast pattern, since in this case none of the clients start out knowing what’s going on and thus all require the same information. The fact that the server can autonomously initiate actions is another difference between these kinds of systems and traditional web applications (server initiated actions are now supported by HTTP/2, albeit in a strange, inside out kind of way, but as far as I can tell they have yet to become part of the typical web application developer’s toolkit).

A direction that some people immediately want to go is to attempt to reduce the variety of messaging patterns by treating the coordination among presences as a data replication problem, which I’ve already said is not what we’re doing here. At the heart of this idea is a sense that you might make the development of presences simpler by reducing the differences between them — that rather than developing a client presence and a server presence as separate pieces of code, you could have a single implementation that will serve both ends of the connection (I can’t count the number of times I’ve seen game companies try to turn single player games into multiplayer games this way, and the results are usually pretty awful). Alternatively, one could implement one end and have the other be some kind of standardized one-side-fits-all thing that has no type-specific logic of its own. One issue with either of these approaches is how you handle the asymmetric information patterns inherent in the world model, but another is the division of labor itself. Systems built on the unum pattern tend to have message interfaces that are fundamentally about behavior rather than about data. That is, what is important about an unum is what it does. Habitat’s design was driven to a very large degree by the need for it to work effectively over 300 and 1200 baud connections. Behavioral protocols are vastly more effective at economizing on bandwidth than data based protocols. One way to think of this is as a form of highly optimized, knowledge-based data compression: if you already know what the possible actions are that can transform the state of something, a parameterized operation can often be represented much more compactly than can all state that is changed as a consequence of the action’s execution. In some sense, the unum pattern is about as anti-REST as you can be.
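The compression argument can be seen even in a toy comparison. The sketch below is not how Habitat encoded anything (its wire format was a compact binary protocol, and the state fields and `sip` rule here are invented); it only shows that when both ends already know what an operation does, naming the operation is shorter than shipping the state it changes.

```python
import json

# Invented state for one teacup; every presence that cares holds a copy.
teacup_state = {
    "id": "teacup-1", "container": "table-7", "x": 12, "y": 40,
    "contents": "tea", "fill_level": 3, "temperature": 71, "steam": True,
}


def sip(state: dict) -> dict:
    """Shared knowledge: what a sip does to a teacup."""
    after = dict(state)
    after["fill_level"] -= 1
    after["temperature"] -= 2
    after["steam"] = after["temperature"] > 60
    return after


# Behavioral protocol: say what happened and let the receiver apply it.
behavioral_msg = json.dumps({"to": "teacup-1", "op": "SIP"})

# Data protocol: ship every field that changed as a result.
after = sip(teacup_state)
changed = {k: v for k, v in after.items() if v != teacup_state[k]}
data_msg = json.dumps({"to": "teacup-1", "set": changed})

print(len(behavioral_msg), "bytes vs", len(data_msg), "bytes")
```

The gap widens as actions touch more state, while the behavioral message stays the same size.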

One idea that I think merits a lot more exploration is this: given the fundamental idea that an unum’s presences are factored according to a division of labor, are there other divisions of labor besides client-server that might be useful? I have a strong intuition that the answer is yes, but I don’t as yet have a lot of justification for that intuition. One obvious pattern to look into is a pure peer-to-peer model, where all presences are equally authoritative and the “true” state of reality is determined by some kind of distributed consensus mechanism. This is a notion we tinkered with a little bit at Electric Communities, but not to any particular conclusion. For the moment, this remains a research question.

One of the things we did do at Electric Communities was build a system where the client-server distinction was on a per-unum basis, rather than “client” and “server” being roles assigned to the two ends of a network connection. To return to our example of a teacup on a table in a room, you might have the server presence of the teacup be on machine A, with machines B and C acting as clients, while machine B is the server for the table and machine C is the server for the room. Obviously, this can only happen if there is N-way connectivity among all the participants, in contrast to the traditional two-way connectivity we use in the web, though whether this is realized via pairwise connections to a central routing hub or as a true crossbar is left as an implementation detail. This kind of per-unum relationship typing was one of the keys to our strategy for making our framework support a world that was both decentralized and openly extensible. (Continuing with the question raised in the last paragraph, an obvious generalization would be to allow the division of labor scheme itself to vary from one unum to another. This suggests that a system whose unums are all initially structured according to the client-server model could still potentially act as a test bed for different schemes for dividing up functionality over the network.)
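In code terms, the per-unum arrangement amounts to keying the role on the unum rather than on the connection. A tiny sketch using the teacup, table, and room example (the table layout and function names are mine, purely illustrative):

```python
# Which machine hosts the server presence of each unum, per the example above.
server_of = {"teacup": "A", "table": "B", "room": "C"}
machines = ["A", "B", "C"]


def role(machine: str, unum: str) -> str:
    """A machine's role is decided per unum, not per network connection."""
    return "server" if server_of[unum] == machine else "client"


def route_request(unum: str) -> str:
    """A request about an unum goes to whichever machine is its server."""
    return server_of[unum]


for m in machines:
    print(m, {u: role(m, u) for u in server_of})
```

Every machine here is a server for one unum and a client for the other two, which is why each participant needs some route to every other.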

Having the locus of authoritativeness with respect to shared state vary from one unum to another opens up lots of interesting questions about the semantics of inter-unum relationships. In particular, there is a fairly broad set of issues that at Electric Communities we came to refer to as “the containership problem”, concerning how to model one unum containing another when the una are hosted on separate machines, and especially how to deal with changes in the containership relation. For example, let’s say we want to take our teacup that’s sitting on the table and put it into a box that’s on the table next to it. Is that an operation on the teacup or on the box? If we have the teacup be authoritative about what its container is, it could conceivably teleport itself from one place to another, or insert itself into places it doesn’t belong. On the other hand, if we have the box be authoritative about what it contains, then it could claim to contain (or not contain) anything it decides it wants. Obviously there needs to be some kind of handshake between the two (or between the three, if what we’re doing is moving an unum from one container to another, since both containers may have an interest — or among the two or three and whatever entity is initiating the change of containership, since that entity too may have something to say about things), but what form that handshake takes leads to a research program probably worthy of being somebody’s PhD thesis project.

Setting aside some of these more exotic possibilities for a moment, we have found the unum pattern to be a powerful and effective tool for implementing virtual worlds and lots of other applications that have some kind of world-like flavor, which, once you start looking at things through a world builder’s lens, is a fairly diverse lot, including smart contracts, multi-party negotiations, auctions, chat systems, presentation and conferencing systems, and, of course, all kinds of multiplayer games. And if you dig into some of the weirder things that we never had the chance to get into in too much depth, I think you have a rich universe of possibilities that is still ripe for exploration.

May 1, 2019

Another Thing Found While Packing to Move

Getting ready to move has turned up all kinds of lost treasures. Here’s a publicity photo of the original Habitat programming team, taken next to a storage shed at Skywalker Ranch in 1987:

There were a couple of other developers who coded bits and pieces, but these four are the ones who lived and breathed the project full time for almost three years.

I particularly like this picture because it’s the only one I have that includes Janet Hunter. Janet was the main Habitat developer at QuantumLink. I think we shot this during one of Janet’s rare visits out west, since she was based in the Washington DC area where QuantumLink was. She wrote most of the non-game-specific parts of the original Habitat server and set the architectural pattern for nearly all the servers I’ve implemented since then.

It’s hard to believe I was ever that young, that thin, or had that much hair.

March 9, 2019

A Lost Treasure of Xanadu

Some years ago I found the cover sheet to a lost Xanadu architecture document, which I turned into this blog post for your amusement. Several people commented to me at the time that they wished they could see the whole document it was attached to. Alas, it appeared to have vanished forever.

Last weekend I found it! I turned up a copy of the complete document while sorting through old crap in preparation for having to move in the next few months. Now that I’ve found it I’m putting it online so it can get hoovered up by the internet hive mind. This is the paradox of the internet — nothing is permanent and nothing ever goes away.

This is a document I wrote in early 1984 at the behest of the System Development Foundation as part of Xanadu’s quest for funding. It is a detailed explanation of the Xanadu architecture, its core data structures, and the theory that underlies those data structures, along with a (really quite laughable) project plan for completing the system.

At the time, we regarded all the internal details of how Xanadu worked as deep and dark trade secrets, mostly because in that pre-open-source era we were stupid about intellectual property. As a consequence of this foolish secretive stance, it was never widely circulated and subsequently disappeared into the archives, apparently lost for all time. Until today!

What I found was a bound printout, which I’ve scanned and OCR’d. The quality of the OCR is not 100% wonderful, but as far as I know no vestige of the original electronic form remains, so this is what we’ve got. I’ve applied minimal editing, aside from removing a section containing personal information about several of the people in the project, in the interest of those folks’ privacy.

Anyone so inclined is quite welcome, indeed encouraged, to attempt a better conversion to a more suitable format. I’d do that myself but I really don’t have the time at the moment.

This should be of interest to anyone who is curious about the history of Project Xanadu or its technical particulars. I’m not sure where the data structures rank given the subsequent 35 or so years of advance in computer science, but I think it’s still possible there’s some genuinely groundbreaking stuff in there.

February 7, 2017

Open Source Lucasfilm’s Habitat Restoration Underway

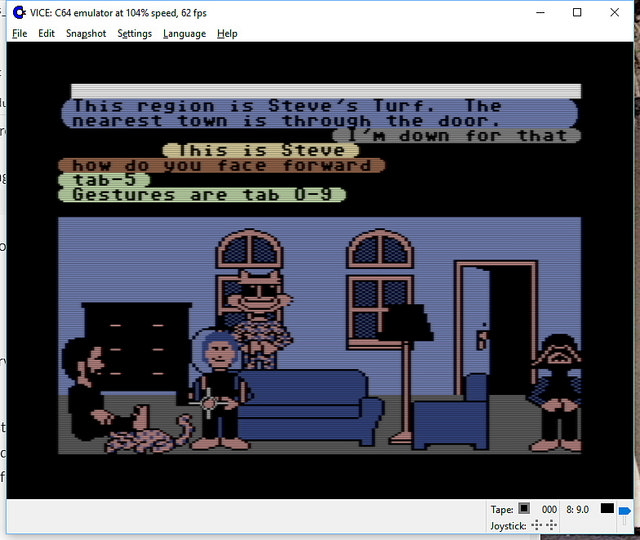

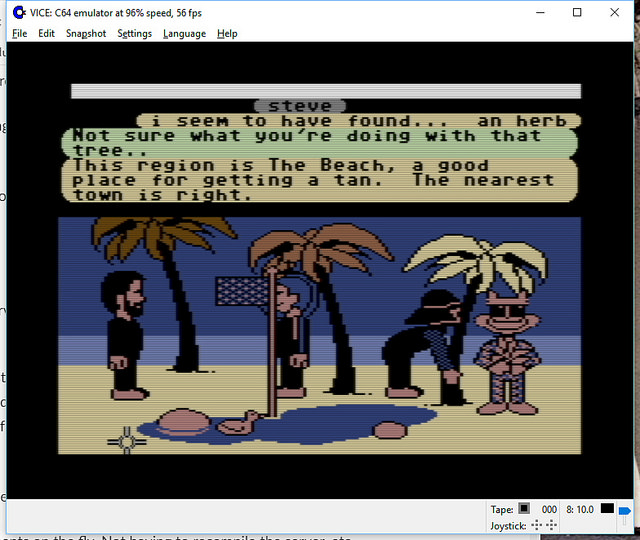

It’s all open source!

Yes – if you haven’t heard, we’ve got the core of the first graphical MMO/VW up and running and the project needs help with code, tools, doc, and world restoration.

I’m leading the effort, with Chip leading the underlying modern server: the Elko project – the Nth generation gaming server, still implementing the basic object model from the original game.

http://neohabitat.org is the root of it all.

http://slack.neohabitat.org to join the project team Slack.

http://github.com/frandallfarmer/neohabitat to fork the repo.

To contribute, you should be able to use a shell, fork a repo, build it, and run it. Current developers use: shell, Eclipse, Vagrant, or Docker.

To get access to the demo server (not at all bullet proofed) join the project.

We’ve had people from around the world in there already! (See the photos)

http://neohabitat.org #opensource #c64 #themade

October 19, 2014

Map of The Habitat World

By now a lot of you may have heard about the initiative at Oakland’s Museum of Digital Arts & Entertainment to resurrect Habitat on the web using C64 emulators and vintage server hardware. If not, you can read more about it here (there’s also been a modest bit of coverage in the game press, for example at Wired, Joystiq, and Gamasutra).

Part of this effort has had me digging through my archives, looking at old source files to answer questions that people had and to refresh my own memory of how things worked. It’s been pretty nostalgic, actually. One of the cooler things I stumbled across was the Habitat world map, something which relatively few people have ever seen because when Habitat was finally released to the public it got rebranded (as “Club Caribe”) with an entirely different set of publicity materials. I had a big printout of this decorating my office at Skywalker Ranch and later at American Information Exchange, but not very many people will have been in either of those places. Now, however, thanks to the web, I can share it publicly for the first time.

We wanted to have a map because we thought we would need a plan for enlarging the world as the user population grew. The idea was to have a framework into which we could plug new population centers and new places for stories and adventures.

The specific map we ended up with came about because I was playing around writing code to generate plausible topographic surfaces using fractal techniques (and, of course, lots and lots and LOTS of random numbers). The little program I wrote to do this was quite a CPU hog, but I could run it on a bunch of different computers in parallel and combine the results (sort of like modern MapReduce techniques, only by hand!). One night I grabbed every Unix machine on the Lucasfilm network that I could lay my hands on (two or three Vax minicomputers and six or eight Sun workstations) and let the thing cook for an epic all-nighter of virtual die rolling. In the morning I was left with this awesome height field, in the form of a file containing a big matrix of altitude numbers. Then, of course, the question was what to do with it, and in particular, how to look at it. Remember that in those days, computers didn’t have much in the way of image display capability; everything was either low resolution or low color fidelity or both (the Pixar graphics guys had some high end display hardware, but I didn’t have access to it and anyway I’d have to write more code to do something with the file I had, which wasn’t in any kind of standard image format). Then I realized that we had these new Apple LaserWriter printers. Although they were 1-bit per pixel monochrome devices, they printed at 300 DPI, which meant you could get away with dithering for grayscale. And you fed stuff to them using PostScript, a newfangled Forth-like programming language. So I ordered Adobe’s book on PostScript and went to work.

I wrote a little C program that took my big height field and reduced it to a 500×100 image at 4 bits per pixel, and converted this to a file full of ASCII hexadecimal values. I then wrapped this in a little bit of custom PostScript that would interpret the hex dump as an image and print it, and voilà, out of the printer comes a lovely grayscale topographic map. Another little quick filter and I clipped all the topography below a reasonable altitude to establish “sea level”, and I had some pretty sweet looking landscape. At this point, you could make out a bunch of obvious geographic features, so we picked locations for cities, and drew some lines for roads between them, and suddenly it was a world. A little bit more PostScript hacking and I was able to actually draw nicely rendered roads and city labels directly on the map. Then I blew it up to a much larger size and printed it over several pages which I trimmed and taped together to yield a six and a half foot wide map suitable for posting on the wall.
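For anyone curious what that pipeline looks like, here is a rough reconstruction in Python rather than C. It is not the original program: the function name, the scaling rule, and the page placement are my own guesses. What it does rely on is the standard PostScript `image` operator reading 4-bit samples as hex via `readhexstring`, with each scanline padded out to a whole byte.

```python
from typing import List


def to_postscript(heights: List[List[float]], sea_level: float) -> str:
    """Render a height field as a 4-bit grayscale PostScript image."""
    rows, cols = len(heights), len(heights[0])
    top = max(max(row) for row in heights)
    span = (top - sea_level) or 1.0   # avoid dividing by zero on flat input

    hex_rows = []
    for row in heights:
        # Clip everything at or below sea level to 0, scale the rest to 0..15.
        levels = [
            0 if h <= sea_level else min(15, int((h - sea_level) / span * 15))
            for h in row
        ]
        if cols % 2:                  # pad the scanline to a byte boundary
            levels.append(0)
        hex_rows.append("".join(f"{v:x}" for v in levels))

    bytes_per_row = (cols + 1) // 2
    header = [
        "%!PS",
        f"/scanline {bytes_per_row} string def",
        "72 72 translate",            # one inch in from the page corner
        f"{cols} {rows} scale",       # one point per pixel
        f"{cols} {rows} 4 [{cols} 0 0 -{rows} 0 {rows}]",
        "{ currentfile scanline readhexstring pop } image",
    ]
    return "\n".join(header + hex_rows + ["showpage"]) + "\n"
```

In this sketch sample value 0 prints black and 15 prints white, so the sea comes out dark and the peaks light; the resulting text can be fed to any PostScript interpreter.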

As I was going through my archives in conjunction with the project to reboot Habitat, I encountered the original PostScript source for the map. I ran it through Ghostscript and rendered it into a 22,800×4,560 pixel TIFF image which I could open in Photoshop and wallow around in. This immediately tempted me to do a bit more embellishment with Photoshop, so a little bit more hacking on the PostScript and I could split the various components of the image (the topographic relief, the roads, the city labels, etc.) into separate images which could then be individually manipulated as layers. I colorized the topography, put it through a Gaussian blur to reduce the chunkiness, and did a few other little bits of cosmetic tweaking, and the result is the image you see here (clicking on the picture will take you to a much larger version):

(Also, if you care to fiddle with this in other formats, the PostScript for the raw map can be gotten here. Beware that depending on what kind of configuration your browser has, your browser may just attempt to render the PostScript, which might not have exactly the results you want or expect. Have fun.)

There are a number of interesting details here worth mentioning. Note that the Habitat world is cylindrical. This lets us encompass several different interesting storytelling possibilities: Going around the cylinder lets you circumnavigate the world; obviously, the first avatar to do this would be famous. The top edge is bounded by a wall, the bottom edge by a cliff. This means that you can fall off the edge of the world, or explore the wall for mysterious openings. By the way, the top edge is West. Habitat compasses point towards the West Pole, which was endlessly confusing for nearly everyone.

We had all kinds of plans for what to do with this, which obviously we never had a chance to follow through on. One of my favorites was the notion that if you walked along the top (west) wall enough, eventually you’d find a door, and if you went through this door you’d find yourself in a control room of some kind, with all kinds of control panels and switches and whatnot. What these switches would do would not be obvious, but in fact they’d control things like the lights and the day/night cycle in different parts of the world, the color palette in various places, the prices of things, etc. Also, each of the cities had a little backstory that explained its name and what kinds of things you might expect to find there. If I run across that document I’ll post it here too.

April 29, 2014

Troll Indulgences: Virtual Goods Patent Gutted [7,076,445]

Another terrible virtual currency/goods patent has been rightfully destroyed – this time in an unusual (but worthy) way: From Law360: EA, Zynga Beat Gametek Video Game Purchases Patent Suit, By Michael Lipkin

Law360, Los Angeles (April 25, 2014, 7:20 PM ET) — A California federal judge on Friday sided with Electronic Arts Inc., Zynga Inc. and two other video game companies, agreeing to toss a series of Gametek LLC suits accusing them of infringing its patent on in-game purchases because the patent covers an abstract idea. … “Despite the presumption that every issued patent is valid, this appears to be the rare case in which the defendants have met their burden at the pleadings stage to show by clear and convincing evidence that the ’445 patent claims an unpatentable abstract idea,” the opinion said.

The very first thing I thought when I saw this patent was: “Indulgences! They’re suing for Indulgences? The prior art goes back centuries!” It wasn’t much of a stretch, given the text of the patent contains this little fragment (which refers to the image at the head of this post):

Alternatively, in an illustrative non-computing application of the present invention, organizations or institutions may elect to offer and monetize non-computing environment features and/or elements (e.g. pay for the right to drive above the speed limit) by charging participating users fees for these environment features and/or elements.

WTF? Looks like reasoning something along those lines was used to nuke this stinker out of existence. It is quite unusual for a patent to be tossed out in court. Usually the invalidation process has to take a separate track, as it has with other cases I’ve helped with, such as The Word Balloon Patent. I’m very glad to see this happen – not just for the defendant, but for the industry as a whole. Just adding “on a computer [network]” to existing abstract processes doesn’t make them intellectual property! Hopefully this precedent will help kill other bad cases in the pipeline already…

December 19, 2013

Audio version of classic “BlockChat” post is up!

On the Social Media Clarity Podcast, we’re trying a new rotational format for episodes: “Stories from the Vault” – and the inaugural tale is a reading of the May 2007 post The Untold History of Toontown’s SpeedChat (or BlockChat™ from Disney finally arrives).

Link to podcast episode page (audio: http://traffic.libsyn.com/socialmediaclarity/138068-disney-s-hercworld-toontown-and-blockchat-tm-s01e08.mp3)

August 2, 2013

Armed and Dangerous

[This is a repost from my long-dead Yahoo 360 blog, originally posted August 2006 about events in spring 2002. I decided to recover this posting from the Internet Archive because recent events, 12 years after 9/11, show that the authorities are STILL over-panicking about our security.]

How could I know that singing “Man of Constant Sorrow” in public could be considered a terrorist weapon?

One early spring evening in 2002 I went for a walk in my neighborhood wearing my FDNY September 11th Memorial T-Shirt (shown above), telling my family that I would return just after sundown (about 30 minutes).

About an hour and a half later I arrived at home teasing them by explaining that I’d “just been handcuffed, interrogated, searched, had a machine gun pointed directly at me, been ordered to my knees two feet from a K-9 gnashing its teeth, and was nearly arrested as a terrorist … all just for singing out loud.”

My family didn’t believe me at first – until I showed them the reddened cuff marks on my wrists and the business card of PAPD Sergeant Sandra Brown.

Now they wanted to hear the whole story…

One mild spring evening in 2002, I felt like singing. I wanted to teach myself some bluegrass and spirituals that I’d discovered recently (mostly as the result of recently seeing O Brother Where Art Thou?) and I felt like being real loud. So, rather than disturb my family, I decided to go for a walk and practice elsewhere. Given the weather, I’d only need a tshirt and jeans to keep me warm until well past sundown. I started singing right away when I got outside, but then noticed some of my neighbors, so I thought that it’d be better if I could find a place to belt out my baritone/bass tones where no one would care if I were in tune. I was practicing, after all.

“The pedestrian walkway over 101 would be perfect”, I thought, “with any luck I’ll be completely drowned out.”

I’d made good time hiking to the pedestrian overpass, humming “Ahhhh am a maaaaan, of con-stant sah-roooow…” along the way. By the time I reached the apex of the passage, the sun was very low in the west dropping just below the hills. The gold-purple sky was an inspirational sight. The constant breeze from the cars whizzing by below was quite effective in carrying my voice away, so I cranked up the volume. I was having a great time and expanded my material to include my favorite Webber show tunes. Other than a pair of guys walking by, my only audience was the late evening commuters, most of whom had just turned on their headlights. It was a blast. For 15 minutes I was able to belt out anything I wanted, as loud as I could.

When I was starting to feel the effects of singing continuously that loudly, the sun had completely set, so I decided to head home. I was running a little later than I’d expected, so I increased my gait just a bit.

As my stride increased (mostly due to gravity) on my way down the sloped ramp back into the neighborhood, directly in front of me appeared two Palo Alto police officers who had just started their way up the ramp. Just a moment after I noticed them, they noticed me, and then did something very, very, strange. They quickly walked backward away from me until they were out of sight, around the corner, at the base of the ramp. I’d never seen anyone do anything like that before. How on earth could I intimidate two police officers just by walking down a pedestrian ramp? As I proceeded down to the exit I called out loud: “HELLO? Is everything alright?”

As I came to the bottom and walked around the corner there were about a half dozen of Palo Alto’s finest, one with what looked like an M-16 and others with pistols pointed directly at me. There was much yelling, and I saw and heard a dog barking threateningly – “Don’t move!” “Turn Around!” “Get Down!” “Put your hands where we can see them!” “Bark! Bark! [Jangling of a large dog chain.]”

I wasted no time at all: I put my hands in the air and turned my back to them. I kneeled, quickly enough that it hurt. “I think there’s been some mistake, whatever you do, please don’t let go of that dog” is all I could think to say at the moment. I had no idea what the heck was going on, but I didn’t want to give them any reason to make a horrible mistake.

“Who are you?” “Where are you from?” “What are you doing here?” “What are you carrying?” were the rapid-fire questions I can remember. I quickly explained that I was on a walk, singing songs. “The only thing I’m carrying is my wallet, which shows I live two blocks from here”, I said, still kneeling. I didn’t even have my house keys. “Take it out and toss it on the ground, but move very slowly”, said a woman who seemed to be in command of the situation. She was to my left, but still behind me where I couldn’t see her. Very, very cautiously, I complied. “Do you have anything else?” The request was rather urgent and sounded specific. “No. Nothing.”

An officer came up and handcuffed my wrists behind my back, aggressively patted me down, and helped me to my feet. My wallet was retrieved by the commander-woman. Once I could face the squad again, I clearly recognized her as Sandra Brown, an officer who had done many hours as a bicycle-beat cop in the downtown Palo Alto area, where my family had spent nearly every Friday evening for nearly 14 years. I was hoping that this meant she might recognize me as well, helping to defuse whatever this horrible mess was all about.

She walked me over to the back of her police cruiser, pressing me back on the trunk hard enough that my handcuffed wrists were pressed into the car metal enough to let me know that I wasn’t going to be going anywhere without her permission. She grabbed the walkie-talkie that I hadn’t previously noticed had been set on the roof of the car and spoke into it: “(muffled) check in. Anything?” I couldn’t make out the response, but the meaning was made clear to me immediately when she asked:

“Did you go all the way across the overpass?” “No.”

“Did you see anyone else up there?” “Just two guys that walked by about 20 minutes ago. Nothing unusual.”

“Where did you put it?” “Put what? I didn’t have anything.”

“Did you leave behind any clothing?” “Clothing? What? No.”

Fifteen to twenty minutes passed. Officer Brown checked my ID and confirmed that I was local. She noticed my shirt for the first time. The cuffs were starting to hurt. I’d been told to be quiet. The sturdy but small blond woman with the assault rifle was keeping it at the ready, but it wasn’t pointing at me. The dog had stopped barking, but was in some kind of station-keeping pose. Lots more radio traffic. I finally pieced together that at least two officers on the other side of the ramp were looking for something, something that they thought I might have hidden there, something critical to this situation.

Finally, the invisible officers at the other end of the radio apparently gave up the search. My heart stopped racing. My temperature started to drop. You see, I finally stopped thinking that I was likely to end up wounded or dead due to someone panicking.

Once the search was over, it became clear that maybe the situation was not what they had expected/feared. Officer Brown started to explain: “We got a phone call from someone on a cell phone driving on 101 reporting that a sniper wearing a trench coat was shooting at cars with a high-powered rifle or machine gun.” Apparently this triggered the Palo Alto equivalent of the SWAT team.

I couldn’t resist: “An overweight middle-aged man, singing the lead from The Phantom of the Opera (probably waving his arms about, crooning to Christine about being ‘inside her mind’), while wearing jeans and a tshirt that reads All Gave Some, Some Gave All on the back, somehow looks like a Columbine kid terrorizing the freeway with an automatic weapon? What irony: Wear a public-safety-supporting tshirt, get suspected of being a sniper.” This observation did get a bit of a giggle out of the one with the real Tommy gun, finally hanging peacefully at her side.

I was feeling a little put out: “One call with such a vague description gets this level of response? Did 9/11 really turn us all into people looking for a terrorist behind every darkened corner? A trench coat? This is pretty unbelievable.” I was starting to get very sore about my wrist pain. “We’re sorry, we need to be extra cautious in situations such as these, if it had turned out to be true… In any case, you’ll have a great story to tell your kids and grandkids.”

“True. Can I get out of these now?” There were a few more rounds on the radio, getting a final approval to release me. Rubbing my wrists, I shared, “You know, my family will never believe me when I tell them that this happened. Do you have one of those Palo Alto Officer trading cards our kids got at school a few years ago?” Turned out that they were out of print, but Officer Brown did have a standard issue business card, which she gave me as they wished me well and I started walking home. [I know I still have it around here somewhere.]

Other than practicing the first of many tellings of this story on the way home, I have never forgotten that the fear generated by the terrorist attacks on 9/11 had changed our world forever. I don’t think that driver would have ever made such a report if this had all occurred one year earlier.

Fortunately for me, the police still are trained to get things right before they themselves start shooting reported terrorists.

“I am a man of constant sorrow. I’ve seen trouble – all my days.”

March 23, 2011

SM Pioneers: Farmer & Morningstar – How Gamers Made us More Social

Shel Israel has just posted @Global Neighbourhoods the latest in his series of posts from his upcoming book Pioneers of Social Media – which includes an interview with us about our contributions over the last 30+ years…

How Gamers Made us More Social

Many of us often overlook the role that games have played in creating social media. They provided much of the technology that we use today, not to mention a certain attitude. Of greatest importance, is that it was on games that people started socializing with each other in large numbers, online and in public. It was in games that people started to self-organize to get complex jobs accomplished.

We had people meeting and sharing and talking and performing tasks several years before we even had the Worldwide Web.

We’re honored to be amongst those highlighted. Shel says about 100 folks will be included. There won’t be enough pages, but we eagerly look forward to the result nonetheless.