Posts filed under "Lessons Learned"

August 5, 2021

February 7, 2017

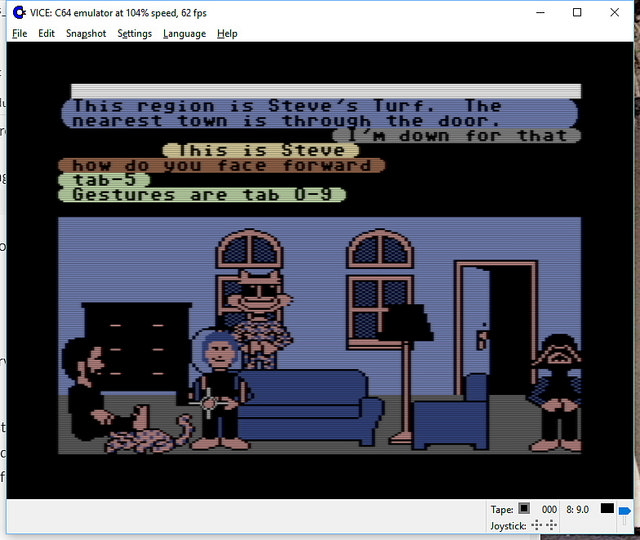

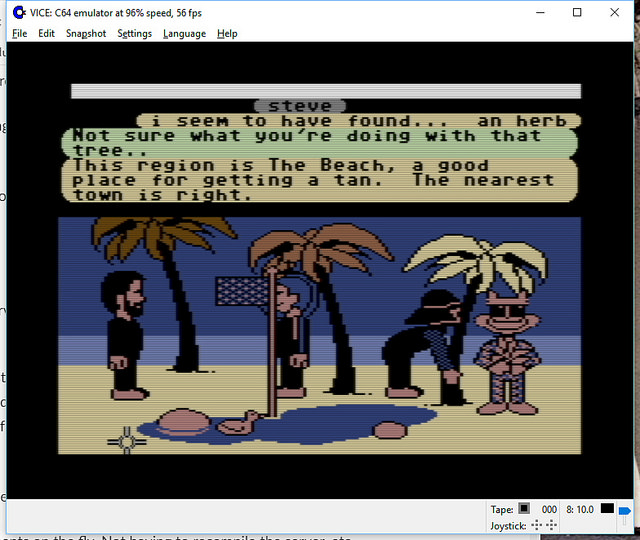

Open Source Lucasfilm’s Habitat Restoration Underway

It’s all open source!

Yes – if you haven’t heard, we’ve got the core of the first graphical MMO/VW up and running and the project needs help with code, tools, doc, and world restoration.

I’m leading the effort, with Chip leading the underlying modern server: the Elko project – the Nth generation gaming server, still implementing the basic object model from the original game.

http://neohabitat.org is the root of it all.

http://slack.neohabitat.org to join the project team Slack.

http://github.com/frandallfarmer/neohabitat to fork the repo.

To contribute, you should be able to use a shell, fork a repo, build it, and run it. Current developers use: shell, Eclipse, Vagrant, or Docker.

To get access to the demo server (not at all bullet proofed) join the project.

We’ve had people from around the world in there already! (See the photos)

http://neohabitat.org #opensource #c64 #themade

October 27, 2014

The Bureaucratic Failure Mode Pattern

When we try to take purposeful action within an organization (or even in our lives more generally), we often find ourselves blocked or slowed by various bits of seemingly unrelated process that must first be satisfied before we are allowed to move forward. Some of these were put in place very deliberately, while others just grew more or less organically, but what they often have in common, aside from increasing the friction of activity, is that they seem disconnected from our ultimate purpose. If I want to drive my car to work, having to register my car with the DMV seems like a mechanically unnecessary step (regardless of what the real underlying reason for it may be).

Note that I’m not talking about the intrinsic difficulty or inconvenience of the process itself (car registration might entail waiting around for several hours in the DMV office or it might be 30 seconds online with a web page, for example), but the cost imposed by the mere existence of the need to report information or get permission or put things in some particular way just so or align or coordinate with some other thing (and the concomitant need to know that you are supposed to do whatever it is, and the need to know or find out how). Each of these is a friction factor; the competence or user-friendliness of whatever necessary procedure is involved may influence the magnitude of the inconvenience, but not the fact of it. (Other recursive friction factors embedded in the organizations or processes behind these things may well figure into why many of them are in fact incompetently executed or needlessly complex or time consuming, but that is a separate matter.)

Over time, organizations tend to acquire these bits of process, the way ships accumulate barnacles, with the accompanying increase in drag that makes forward progress increasingly difficult and expensive. However, barnacles are purely parasitic. They attach themselves to the hull for their own benefit, while the ship gains nothing of value in return. But even though organizational cynics enjoy characterizing these bits of process as also being purely parasitic, each of those bits of operational friction was usually put there for some purpose, presumably a purpose of some value. It may be that the cost-benefit analysis involved was flawed, but the intent was generally positive. (I’m ignoring here for a moment those things that were put in place for malicious reasons or to deliberately impede one person’s actions for the benefit of someone else. These kinds of counter-productive interventions do happen from time to time, and while they tend to loom large in people’s institutional mythologies, I believe such evil behavior is actually comparatively rare – perhaps not that uncommon in absolute terms, but still dwarfed by the truly vast number of ordinary, well-intentioned process elements that slow us down every day.)

Because I’m analyzing this from a premise of benign intent, I’m going to avoid characterizing these things with a loaded word like “barnacles”, even though they often have a similar effect. Instead, let’s refer to them as “checkpoints” – gates or control points or tests that you have to pass in order to move forward. They are annoying and progress-impeding but not necessarily valueless.

We are forced to pass through checkpoints all the time – having to swipe your badge past a reader to get into the office (or having to unlock the door to your own home, for that matter), entering a user name and password dozens of times per day to access various network services, getting approval from your boss to take a vacation day, having to fill out an expense report form (with receipts!) to get reimbursed for expenses you have incurred, all of the various layers of review and approval to push a software change into production, having to get approval from someone in the legal department before you can adopt a new piece of open source software; the list is potentially endless.

Note that while these vary wildly in terms of how much drag they introduce, for many of them the actual amount is very little, and this is a key point. The vast majority of these were motivated by some real world problem that called for some tiny addition to the process flow to prevent (or at least inhibit) whatever the problem was from happening again. No doubt some were the result of bad dealing or of an underemployed lawyer or administrator trying to preempt something purely hypothetical, but I think these latter kinds of checkpoint are the exception, and we weaken our campaign to reduce friction by paying too much attention to them – that is, by focusing too much on the unjustified bureaucracy, we distract attention from the far larger (and therefore far more problematic) volume of justified bureaucracy.

Let’s just presume, for the purpose of argument, that each of the checkpoints that we encounter is actually well motivated: that it exists for a reason, that the reason can be clearly articulated, that the reason is real, that it is more or less objective, that people, when presented with the argument for the checkpoint, will find it basically convincing. Let’s further presume that the friction imposed by the checkpoint is relatively modest – that the friction that results is not because the checkpoint is badly implemented but simply because it is there. And yes, I am trying, for purposes of argument, to cast things in a light that is as favorable to the checkpoints as possible. The reason I’m being so kind hearted towards them is because I think that, even given the most generous concessions to process, we still have a problem: the “death of a thousand cuts” phenomenon.

Checkpoints tend to accumulate over time. Organizations usually start out simple and only introduce new checkpoints as problems are encountered – most checkpoints are the product of actual experience. Checkpoints tend to accumulate with scale. As an organization grows, it finds itself doing each particular operation it does more often, which means that the frequency of actually encountering any particular low probability problem goes up. As an organization grows, it finds itself doing a greater variety of things, and this variety in turn implies greater variety of opportunities to encounter whole new species of problems. Both of these kinds of scale-driven problem sources motivate the introduction of additional checkpoints. What’s more, the greater variety of activities also means a greater number of permutations and combinations of activities that can be problematic when they interact with each other.
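The scale effect described above can be put in rough numbers. The following is a minimal sketch, assuming each operation independently has the same small chance of hitting a given problem; the function name and the probability used are hypothetical, chosen only for illustration.

```python
def chance_of_at_least_one(p_per_operation: float, operations: int) -> float:
    """Probability that a rare problem shows up at least once.

    Assumes (for illustration only) that every operation is independent
    and carries the same probability of triggering the problem.
    """
    return 1.0 - (1.0 - p_per_operation) ** operations


# A hypothetical one-in-ten-thousand problem, at three organizational scales.
for operations in (100, 10_000, 1_000_000):
    chance = chance_of_at_least_one(1e-4, operations)
    print(f"{operations:>9,} operations: {chance:.4f}")
```

Under these assumptions, a problem that a small organization will almost never see becomes close to a certainty for a large one, which is when somebody proposes a checkpoint for it.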

Checkpoints, once in place, tend to be sticky – they tend not to go away. Partly this is because if the checkpoint is successful at addressing its motivating problem, it’s hard to tell if the problem later ceases to exist – either way you don’t see it. In general, it is much easier for organizations to start doing things than it is for them to stop doing things.

The problem with checkpoints is their cumulative cost. In part, this is because the small cost of each makes them seductive. If the cost of checkpoint A is close to zero, it is not too painful, and there is little motivation or, really, little actual reason to do anything about it. Unfortunately, this same logic applies to checkpoint B, and to checkpoint C, and indeed to all of them. But the sum of a large number of values near zero is not necessarily itself a value near zero. It can, instead, be very large indeed. However, as we stipulated in our premises above, each one of them is individually justified and defensible. It is merely their aggregate that is indefensible – there is nothing to tell you, “here, this one, this is the problem” because there isn’t any one which is the problem. The problem is an emergent phenomenon.
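To make the arithmetic concrete, here is a small sketch. Every number in it is invented for illustration (two minutes per checkpoint, thirty checkpoints a day, five hundred people, 250 working days); none of them comes from a real organization.

```python
def cumulative_cost_minutes(per_checkpoint_minutes: float,
                            checkpoints_per_day: int,
                            people: int,
                            working_days: int) -> float:
    """Total minutes an organization spends passing checkpoints in a year."""
    return per_checkpoint_minutes * checkpoints_per_day * people * working_days


# Hypothetical figures: each checkpoint is individually "close to zero".
total_minutes = cumulative_cost_minutes(
    per_checkpoint_minutes=2,
    checkpoints_per_day=30,
    people=500,
    working_days=250,
)
total_hours = total_minutes / 60
print(f"{total_hours:,.0f} person-hours per year")
```

No single term in that product looks alarming, which is exactly why nobody is motivated to attack any one of them.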

Any specific checkpoint may be one that you encounter only rarely, or perhaps only once. Consider, for example, all the various procedures we make new hires go through. When you hit such a checkpoint, it may be tedious and annoying, but once you’ve passed it it’s done with. Thereafter you really have no incentive at all to do anything about it, because you’ll never encounter it again. But if we make a large number of people each go through it once, there’s still a large multiplier, and we’ve still burdened our organization with the cumulative cost.

A problem of particular note is that, because checkpoints tend to be specialized, they are often individually not well known. Plus, a larger total number of checkpoints increases the odds in general that you will encounter checkpoints that are unknown or mysterious to you, even if they are well known to others. Thus it becomes easy for somebody without the relevant specialized knowledge to get into trouble by violating a rule that they didn’t even know to exist.

Unknown or poorly understood checkpoints increase friction disproportionately. They trigger various kinds of remedial responses from the organization, in the form of compliance monitoring, mandatory training sessions, emailed warning messages and other notices that everyone has to read, and so on. Each such checkpoint thus generates a whole new set of additional checkpoints, meaning that the cumulative frictions multiply instead of just adding.
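One way to picture the difference between adding and multiplying is the toy model below. It assumes, purely for illustration, that every base checkpoint spawns the same number of remedial checkpoints (training, notices, monitoring); the function and its parameters are hypothetical.

```python
def total_checkpoints(base: int, remedial_per_checkpoint: int) -> int:
    """Count base checkpoints plus the remedial ones each of them spawns."""
    return base * (1 + remedial_per_checkpoint)


# With no remedial overhead the count is just the base number.
print(total_checkpoints(base=40, remedial_per_checkpoint=0))
# If each checkpoint drags three remedial ones along, the total quadruples.
print(total_checkpoints(base=40, remedial_per_checkpoint=3))
```

In this model, adding one more base checkpoint costs not one unit of friction but one plus however many remedial measures come with it.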

Violation of a checkpoint may visit sanctions or punishment on the transgressor, even if the transgression was inadvertent. The threat of this makes the environment more hostile. It trains people to become more timid and risk averse. It encourages them to limit their actions to those areas where they are confident they know all the rules, lest they step on some unfamiliar procedural landmine, thus making the organization more insular and inflexible. It gives people incentives to spend their time and effort on defensive measures at the expense of forward progress.

When I worked at Electric Communities, we had (as most companies do) a bulletin board in our break room where we displayed all the various mandatory notices required by a profusion of different government agencies, including arms of the federal government, three states (though we were a California company, we had employees who commuted from Arizona and Oregon and so we were subject to some of those states’ rules too), a couple of different regional agencies, and the City of Cupertino. I called it The Wall Of Bureaucracy. At one point I counted 34 different such notices (and employees, of course, were expected to read them, hence the requirement that they be posted in a prominent, common location, though of course I suspect few people actually bothered). If you are required to post one notice, it’s pretty easy to know that you are in compliance: either you posted it or you didn’t. But if you are required to post 34 different notices, it’s nearly impossible to know that the number shouldn’t be 35 or 36 or that some of the ones you have are out of date or otherwise mistaken. Until, of course, some government inspector from some agency you never heard from before happens to wander in and issue you a citation and a fine (and often accuse you of being a bad person while they’re at it). As Alan Perlis once said, talking about programming, “If you have a procedure with ten parameters, you probably missed some.”

In the extreme case, the cumulative costs of all the checkpoints within an organization can exceed the working resources the organization has available, and forward progress becomes impossible. When this happens, the organization generally dies. From an external perspective – from the outside, or even from one part of the organization looking at another – this appears insane and self-destructive, but from the local perspective governing any particular piece of it, it all makes sense and so nothing is done to fix it until the inexorable laws of arithmetic put a stop to the whole thing. A famous example of this was Atari, where by 1984 the combined scleroses affecting the product development process became so extreme that no significant new products were able to make it out the door because the decision making and approval process managed to kill them all before they could ship, even though a vast quantity of time and money and effort was spent on developing products, many of them with great potential. Few organizations manage to achieve this kind of epic self-absorption, though some do seem to approach it as an asymptote (e.g., General Motors). In practice, however, what seems to keep the problem under control, here in Silicon Valley anyway, is that the organization reaches a level of dysfunction where it is no longer able to compete effectively and it is supplanted in the marketplace by nimbler and generally younger rivals whose sclerosis is not as advanced.

The challenge, of course, is how to deal with this problem. The most common pathway, as alluded to above, is for a newer organization to supplant the older one. This works, not because the one organization is intrinsically more immune to the phenomenon than the other but simply due to the fact that because it is younger and smaller it has not yet developed as many internal checkpoints. From the perspective of society, this is a fine way of handling things; this is Schumpeter’s “creative destruction” at work. It is less fine from the perspective of the people whose money or lives are invested in the organization being creatively destroyed.

Another path out of the dilemma is strong leadership that is prepared to ride roughshod over the sound justifications supporting all these checkpoints and simply do away with them by fiat. Leaders like this will disregard the relevant constituencies and just cut, even if crudely. Such leaders also tend to be authoritarian, megalomaniacal, visionary, insensitive, and arguably insane – and, disturbingly often, right – i.e., they are Steve Jobs. They also tend to be a bit rough on their subordinates. This kind of willingness to disrespect procedure can also sometimes be engendered by dire necessity, enabling even the most hidebound bureaucracies to manifest surprising bursts of speed and effectiveness. A well known and much studied example of this phenomenon is the military, ordinarily among the stuffiest and most procedure bound of institutions, which can become radically more effective in times of actual war. In the first three weeks of American involvement in World War II, when we weren’t yet really doing anything serious, Army Chief of Staff George Marshall merely started carefully asking people questions and half the generals in the US Army found themselves retired or otherwise displaced.

A more user-friendly way to approach the problem is to foster an institutional culture that sees the avoidance of checkpoints as a value unto itself. This is very hard to do, and I am hard pressed to think of any examples of organizations that have managed to do this consistently over the long term. Even in the short term, examples are few, and tend to be smaller organizations embedded within much larger, more traditional ones. Examples might include Bell Labs during AT&T’s pre-breakup years, Xerox PARC during its heyday, the Lucasfilm Computer Division during the early 1980s, or the early years of the Apollo program. Each of these examples, by the way, benefited from a generous surplus of externally provided resources, which allowed them to trade a substantial amount of resource inefficiency for effective productivity. Surplus resources, however, tend also to engender actual parasitism, which ultimately ends the golden age, as all these examples attest.

The foregoing was expressed in terms of people and organizations, but essentially the same analysis applies almost without modification to software systems. Each of the myriad little inefficiencies, rough edges, performance draining extra steps, needless added layers of indirection, and bits of accumulated cruft that plague mature software is like an organizational checkpoint.

December 19, 2013

Audio version of classic “BlockChat” post is up!

On the Social Media Clarity Podcast, we’re trying a new rotational format for episodes: “Stories from the Vault” – and the inaugural tale is a reading of the May 2007 post The Untold History of Toontown’s SpeedChat (or BlockChat™ from Disney finally arrives)

Link to podcast episode page

http://traffic.libsyn.com/socialmediaclarity/138068-disney-s-hercworld-toontown-and-blockchat-tm-s01e08.mp3

August 26, 2013

Randy’s Got a Podcast: Social Media Clarity

I’ve teamed up with Bryce Glass and Marc Smith to create a podcast – here’s the link and the blurb:

Social Media Clarity – 15 minutes of concentrated analysis and advice about social media in platform and product design.

First episode contents:

News: Rumor – Facebook is about to limit 3rd party app access to user data!

Topic: What is a social network, why should a product designer care, and where do you get one?

Tip: NodeXL – Instant Social Network Analysis

August 23, 2013

Patents and Software and Trials, Oh My! An Inventor’s View

What does almost 20 years of software patents yield? You’d be surprised!

I gave an Ignite talk (5 minutes: 20 slides advancing every 15 seconds) entitled

“Patents and Software and Trials, Oh My! An Inventor’s View”

Here are some improved links…

- I’ve created ip-reform.org to support the “I Won’t Sign Bogus Patents” pledge.

- Encourage your company to adopt Twitter’s Innovator’s Patent Agreement

- Support the EFF on Patent Reform – DefendInnovation.org has a proposal

- Sequestration has delayed a Bay Area PTO office, support this bill

I gave the talk twice, and the second version is also available (shows me giving the talk and static versions of my slides…) – watch that here:

July 25, 2012

Forward looking statements

People say things to other people all the time that are misinterpreted or misunderstood; this is a normal part of life as a social animal. But this is especially true of things people say about the future, what the securities business calls “forward looking statements”. Statements about the future are marvelous sources of chaos and confusion because the future is intrinsically uncertain. The inevitable divergence between what someone said at one time and what actually happened at a later time invites all kinds of reinterpretation and second guessing and finger pointing, well beyond the usual muddle that is an ordinary part of human social interaction.

Because people in an organization are trying to coordinate purposeful and often complex tasks over time, forward looking statements make up a large fraction of intra-organizational communications, a larger fraction than I think is typical in purely social or familial interactions. Over the years I’ve learned that I often have to train people I’m working with on the distinction between three related but very different kinds of forward looking statements: plans, predictions, and promises. In my experience, somebody treating one of these as one of the others can be a significant generator of interpersonal discord and organizational dysfunction.

In particular we make a lot of these kinds of statements to people to whom we are in some way accountable, such as managers and executives up the chain of command, but also, notably, investors. We also make these kinds of statements to peers and subordinates, but somehow I’ve found that the most chaotic and damaging effects of misunderstandings about what something really meant tend to happen when communicating upward in a power relationship. Consequently, reinforcing a clear understanding of these distinctions has become part of my standard routine for breaking in new bosses.

The distinctions are subtle, but important:

- Plans are about intention

- Predictions are about expectation

- Promises are about commitment

A plan is a prospective guide to action. A plan can be wrong (moreover, it can be known to be wrong) and yet be still useful. A plan is often an approximation or even a wild guess. However, if you are in a high state of ignorance and yet trying to take purposeful action, you have to start somewhere. As George Patton famously said, “A good plan, violently executed now, is better than a perfect plan next week.” Plans can readily change, because over time you learn things, particularly as a side effect of trying to execute the plan itself. In fact, in my line of work, if your plans aren’t changing relatively frequently you’re probably doing something wrong. Plans typically concern matters that are within your own sphere of control: “first I will do this, then I will do that”.

A prediction is a declaration about what you think will happen. A prediction may very well encompass elements that are beyond your control. A prediction will often incorporate, if only implicitly, some model or theory or idea you have about how some part of the world works. When making a prediction, you are offering somebody else the benefit of your knowledge and analysis, so it can be beneficial to articulate your reasons for believing as you do. Like plans, predictions can change, but the reasons for change are different: a prediction can change if external facts change, or if you discover some shortcoming in your analysis. Thus it may also be important to be explicit and articulate when you change a prediction: explain why. Unlike a plan, a prediction that is just a wild guess is largely worthless, though a prediction that is the product of an inarticulable intuition may still be useful (but if so, in some sense it’s not really a wild guess – though it’s a valuable and rare skill to be able to reliably distinguish the times you are going with your gut from the times you are just stabbing in the dark).

A promise is a statement that you grant other people the right to treat as a fact that they can rely on, as they figure out their own actions and make plans, predictions, and promises of their own. A promise is a positive assertion that you will or will not do something specific. A promise is not something that generally changes; a promise is either kept or not kept. A change in circumstances may render a promise unkeepable or inappropriate, however. People put a lot of moral weight on promises, because accepting a promise requires trust. Because trust is involved, a broken promise can have emotional and organizational consequences that go beyond the direct practical effects of whatever contrary thing was or was not done. I could go on at length about the moral and emotional dimensions, but it would be a digression right now. The short version is: promises carry a lot of baggage.

On their face, these three kinds of things are all simply declarations about the future, and there’s nothing innate that necessarily marks which of these a given statement is: “I will mow the lawn tomorrow” could legitimately be taken as any one of the three. The differences have to do not with the form of the statement but with the intent. The reason the distinctions remain important, however, is that serious trouble can result when somebody says something intended as one of these categories, and somebody else interprets it as one of the others. The reasons for this sort of misinterpretation are varied and probably infinite: the person who said it was unclear about what they meant, the person who heard it wasn’t paying attention, or misunderstood, or had different background assumptions, or was simply clueless. Sometimes the misinterpretation is deliberate and willful; this is especially destructive.

These categories are not pure. That is, a single statement is not necessarily 100% one of these things and 0% either of the others. A statement can be a mixture. However, having the parties at both ends of the communication be clear on what was intended is still essential.

There are many different ways trouble can result from interpreting a statement of one of these types as one of the others.

Treating a plan as if it were a prediction invites confusion and mayhem if the plan changes. The normal evolution of a project can be seen as evidence of problems where none actually exist: “You said you were going to do A and instead you did B. Why did you say you were going to do A? Do you really know what you are doing? Please explain.” A lot of time and energy can be dissipated accounting for changes to people for whom the changes weren’t actually important.

During the last year of the Habitat project, we reached the point where the product was fundamentally complete but it had a lot of bugs. The bug list became our main planning tool: each bug was assigned a priority and a rough time estimate, and the bug list was the thing that each developer looked to to decide what to do next. I call it a bug list, but not everything on it was, strictly speaking, a bug. Some things were tasks that we’d like to get done that needed to be balanced against the debugging work, and other things were just stuff that could be made better if we spent the time or resources. Since the world is constantly changing and we are constantly learning, a fairly common pattern was for a task to be identified and put on the list, and then gradually drift into some form of irrelevance as the shape of the system evolved or operational experience gave us feedback about what was really important. This kind of drift and accompanying deprioritization is a process that every developer should be familiar with.

Perversely, we found ourselves keeping multiple uncoordinated bug lists. As the project matured we acquired a product manager, who was a well intentioned but ultimately useless detail freak. In an attempt to track the status of the project, in hopes of answering management’s eternal question, “when is it going to be done?”, she’d convene status meetings wherein she’d try to use the bug list as a checklist. Every couple of days we’d spend several hours going through these items, and all the dross that we’d been ignoring because it was irrelevant or pointless became a topic for discussion, and “never mind that” was never an acceptable way to dispose of these items. Her reasoning was that if something had been important enough to get put on the list, it shouldn’t be taken off without due consideration. Since she wasn’t the person doing the work and so didn’t understand a lot of the particulars, everything had to be argued and debated and explained, wasting many hours of time. Plus, she’d be adding up all the time estimates for these random and vague things and freaking out because the total was wildly unreasonable – never mind that the estimates were engineers’ guesses to begin with and many of these tasks would never be done anyway. And on top of all that, a lot of these status meetings were teleconferences with our partners at QuantumLink, where each of these irrelevant items got unfolded into even more useless discussion and became the basis for lots of interorganizational dispute. So we found ourselves developing the defensive habit of keeping private todo lists of tasks we’d identified that we didn’t want to have to spend hours debating, and everybody made up their own plan.

The consequence of all of this was that a whole lot of planning activity was taking place off the books, so when the work got done it meant that lots of resources were spent on things that never showed up in the official project plan and could not be accounted for. It also meant that each of us had a much fuzzier than necessary picture of what the others were doing, and management had a worse picture than that.

Nearly every experienced developer I know has his or her own variation of this story. Many of us have several.

A plan that is treated as a promise is even worse than one treated as a prediction. A normal change of plan can become an invitation to recrimination or outright hostility or even punishment. Plans treated as promises are at the root of many of the most awful cases of organizational dysfunction I’ve ever experienced.

One of the projects I worked on at Yahoo! (to protect the guilty I will refrain from naming names) actually kept two schedules: the official schedule, for showing to upper management (the promise), and the real schedule, for day to day use by the people doing the work (the plan). As the project evolved, these two diverged ever more sharply, until the picture that upper management was getting became a complete and utter fantasy. At one level, the problem was that the person running the project was a craven coward, afraid of telling the truth to his superiors because he knew they wouldn’t like it (the real schedule said that things were going to take a lot longer than the Potemkin schedule said — funny how it never seems to go the other way). But at another level, the deeper problem was that the higher echelon people persisted in treating any forward looking statement by their subordinates as a promise, which made planning impossible.

Treating a prediction as a promise holds someone responsible for the consequences of their analysis rather than for the quality of the analysis itself. Even if someone has some control over whether a prediction comes true or not, the mere act of making a prediction should not carry with it the obligation to intervene to ensure the outcome. Many predictions are conditional, statements of the form “if A happens then B will happen”; this does not mean that someone who says this is now committed to making A happen. Indeed, as with plans, changes in circumstances can render a prediction wrong or irrelevant. It may be more constructive to adapt to the changed reality than to try to bend reality just to preserve the prediction.

Treating a prediction as a promise often leads to people being held responsible for things they have no control over. Putting people in this sort of bind is a classic cause of various forms of mental illness. Aside from being basically useless and stupid, this is a great way to make people hate you, and you’d deserve it. Nevertheless, how many of us have experienced a boss refusing to hear that something can’t be done, even when it really couldn’t?

Treating a prediction as a promise abdicates responsibility. If you are obligated to produce some outcome and fail because some prediction you relied on turns out to have been wrong, it is still your fault. It was you who chose to rely on the prediction. Government and big business both do this all the time, trying to duck accountability for mismanagement or malfeasance by pointing at external estimates or projections gone wrong (indeed, at times it seems like the Congressional Budget Office was established principally to enable politicians to use this particular dodge).

The failure modes just discussed are the worst, because each, in one form or another, imputes causality that isn’t really there. The other possible category confusions can still be disruptive, however, by jumbling the mental models people use to make sense of the world.

If you treat someone’s promise or prediction as a plan, it means you are pretending they have a plan when they might not. You are confusing ends with means. Sometimes, of course, you don’t care what their plan is, and sometimes it’s none of your business anyway, but in such cases you should know that you are banking on the quality of their analysis or of their commitment, and not on a fantasy model of what they are doing.

If you treat someone’s promise as a prediction, you risk using the wrong grounds to assess the validity of their statement. You consider the trustworthiness of a prediction by looking at the predictor’s knowledge and analytic ability, whereas a promise is evaluated by looking at the promisor’s incentives and their ability to execute the relevant tasks. These two pathways to assessment are wildly different, and so if you use one when you should use the other you are in danger of getting the wrong answer.

There are already plenty of ways for organizational relationships to go off the rails without adding the various nasty species of communications failures I’ve described here. However, I don’t think it’s sufficient to just exhort everyone to try to be clearer. Managers, in particular, need to be aware of these failure modes and press for clarity when somebody says something forward-looking and the category it belongs in is uncertain. Because humans tend to like certitude, many managers have a bias towards interpreting the things people say as constraining the future more than they actually do. If they do this a lot, it teaches their subordinates to be stingy with their knowledge, timid in their public outlook, and even sometimes to lie defensively. All of these things are corrosive to success.

March 23, 2011

SM Pioneers: Farmer & Morningstar – How Gamers Made us More Social

Shel Israel has just posted @Global Neighbourhoods the latest in his series of posts from his upcoming book Pioneers of Social Media – which includes an interview with us about our contributions over the last 30+ years…

How Gamers Made us More Social

Many of us often overlook the role that games have played in creating social media. They provided much of the technology that we use today, not to mention a certain attitude. Of greatest importance, is that it was on games that people started socializing with each other in large numbers, online and in public. It was in games that people started to self-organize to get complex jobs accomplished.

We had people meeting and sharing and talking and performing tasks several years before we even had the Worldwide Web.

We’re honored to be amongst those highlighted. Shel says about 100 folks will be included. There won’t be enough pages, but we eagerly look forward to the result nonetheless.

January 15, 2011

Requiem for Blue Mars

Another virtual world startup (Blue Mars) is dying. At The Andromeda Media Group Blog Will Burns [Aeonix Aeon] writes:

Looking Back at the Future

The really interesting part about all of this is that in order to see the future of Avatar Reality, and subsequently Blue Mars (or any virtual environment today), we need not look into the future but instead look to the past…

[many interesting insights about 1990s era worlds]

In 1990, the solution was given by two people to all of this madness. Chip Morningstar and F. Randall Farmer, authors of Lessons Learned From Lucasfilm’s Habitat. Strangely enough I had asked Mr Farmer about Linden Lab and he informed me that he was actually called in as a consultant in the early days, and not surprisingly, ignored.

That’s a bit of a harsh summary, but more than five years ago I did write The Business of Social Avatar Virtual Worlds: Or, why I really like Second Life, even if their business is most likely doomed. Clearly I wasn’t 100% right about them – they found a business model that at least got them to cover their run-rate. But many of the things in there may apply to Blue Mars…

November 16, 2010

Quora: What lessons of Social Web do you wish had been better integrated into Yahoo?

On Quora, an anonymous user asked me the following question:

In hindsight, what lessons have you learned from the Social Web that you wish you had been more successful at integrating into Yahoo before you were let go?

I considered this question at length when composing this reply – this is probably the most thought-provoking question I’ve been asked to publicly address in months.

If you read any of my blog posts (or my recent book), you already know that I’ve got a lot of opinions about how the Social Web works: I rant often about identity, reputation, karma, community management, social application design, and business models.

I did these same things during my time for and at Yahoo!

We invented/improved user-status sharing (what later became known as Facebook Newsfeeds) when we created Yahoo! 360° [Despite Facebook’s recently granted patent, we have prior art in the form of an earlier patent application and the evidence of an earlier public implementation.]

But 360 was prematurely abandoned in favor of a doomed-from-the-start experiment called Yahoo! Mash. It failed out of the gate because the idea was driven not by research, but personality. But we had hope in the form of the Yahoo! Open Strategy, which promised a new profile full of social media features, deeply integrated with other social sites from the very beginning. After a year of development – Surprise! – Yahoo! flubbed that implementation as well. In four attempts (Profiles, 360, Mash, YOS) they’d only had one marginal success (360), which they sabotaged several times by telling users over and over that the service was being shut down and replaced with inferior functionality. Game over for profiles.

We created a reputation platform and deployed successful reputation models in various places on Yahoo! to decrease operational costs and to identify the best content for search results and to be featured on property home pages [See: The Building Web Reputation Systems Wiki and search for Yahoo to read more.]

The process of integrating with the reputation platform required product management support, but almost immediately after my departure the platform was shipped off to Bangalore to be sunsetted. Ironically, since then the folks at Yahoo! are thinking about building a new reputation platform – since reputation is obviously important, and everyone from the original team has either left, been laid off, or moved on to other teams. Again, this will be the fourth implementation of a reputation platform…

Are you sensing a pattern yet?

Then there’s identity. The tripartite identity model I’ve blogged about was developed while at Yahoo! in an attempt to explain why it is brain-dead to ask users to reveal their IM name, their email address, and half their login credentials to spammers in order to leave a review of a hotel.

Again we built a massively scalable identity service platform to allow users to be seen as their nickname, age, and location instead of their YID. And again, Yahoo! failed to deploy properly. Despite a cross-company VP-level mandate, each individual business unit silo dragged their heels in doing the (non-trivial, but important and relatively easy) work of integrating the platform. Those BUs knew the truth of Yahoo! – if you delay long enough, any platform change will lose its support when the driving folks leave or are reassigned. So – most properties on Yahoo! are still displaying YIDs and getting up to 90% fewer user contributions as a result.

That’s what I learned: Yahoo! can’t innovate in Social Media. It has a long history in this, from Yahoo! Groups, which during my tenure had three separate web 2.0 re-designs, with each tossed on the floor in favor of cheap and easy (and useless) integrations (like with Yahoo! Answers) to Flickr, Upcoming, and Delicious. I’m sad to say, Yahoo! seems incapable of reprogramming its DNA, despite regular infusions of new blood. Each attempt ends in either an immune-response (Flickr has its own offices, and a fairly well known disdain for Sunnyvale) or assimilation and decreasing relevance (HotJobs, Personals, Groups, etc.).

So, in the end, I find I can’t answer the question. I was one of many people who tried to drive home the lessons of the social web for the entire time I was there. YOS (which I helped spec in fall 2007) was the last attempt to reshape the company to be social through and through. But, it was a lost cause – the very structure of the environment is personality driven. When those personalities leave, their projects immediately get transferred to Bangalore for end-of-life support, just as much of YOS has been…

I don’t know what Yahoo! is anymore, but I know it isn’t inventing the future of social anything.

[As I sat through this year’s F8 developers conference and listened to Mark Z describe 95% of the YOS design, almost 3 years later, I knew I’d have to write this missive one day. So thanks for the prodding, Anonymous @ Quora]

Randy Farmer

Social Media Consultant, MSB Associates

Former Community Strategy Analyst for Yahoo!

[Please direct comments to Quora]